Why Data Analysis:

- Data is everywhere.

- Data Analysis helps us answer questions from data.

Data analysis plays an important role in:

- Discovering useful information

- Answering Questions

- Predicting the future or the unknown

Python Packages for Data Science:

A Python library is a collection of functions and methods that allows you to perform many actions without writing the underlying code yourself. Libraries usually contain built-in modules providing different functionality that you can use directly.

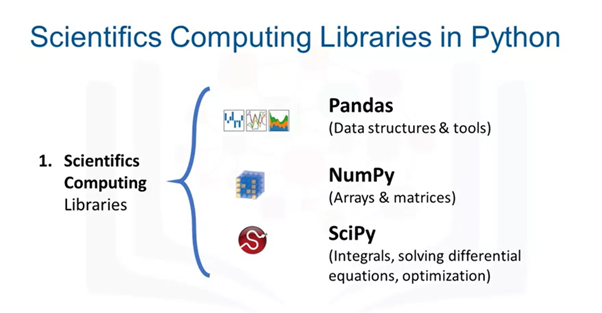

Scientific Computing Libraries:

Pandas: Pandas offers data structures and tools for effective data manipulation and analysis. It provides fast access to structured data. The primary instrument of Pandas is a two-dimensional table with column and row labels, called a data frame. It is designed to provide easy indexing functionality.
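As a minimal sketch of the data frame idea, the snippet below builds a small table by hand and looks up values by label. The column names, row labels, and values are made up for illustration.

```python
import pandas as pd

# Hypothetical data: two labeled columns, three labeled rows.
df = pd.DataFrame(
    {"make": ["audi", "bmw", "volvo"], "price": [13950, 16430, 12940]},
    index=["a", "b", "c"],
)

# Label-based indexing: row "b", column "price".
bmw_price = df.loc["b", "price"]

# Selecting a single column returns a one-dimensional Series.
prices = df["price"]
```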

NumPy: The NumPy library uses arrays for its inputs and outputs. It can be extended to objects for matrices, and with minor coding changes developers can perform fast array processing.
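A small sketch of array processing with NumPy, using invented values. Arithmetic is applied to whole arrays at once, and a two-dimensional array can stand in for a matrix.

```python
import numpy as np

# Arrays go in, arrays come out: arithmetic is applied element-wise
# without an explicit Python loop.
a = np.array([1.0, 2.0, 3.0])
b = np.array([10.0, 20.0, 30.0])
total = a + b   # element-wise sum
scaled = a * 2  # every element doubled

# A two-dimensional array can be treated as a matrix.
m = np.array([[1, 2], [3, 4]])
product = m @ m  # matrix multiplication
```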

SciPy: SciPy includes functions for advanced math problems such as integration, solving differential equations, and optimization.

Visualization Libraries:

Matplotlib: The Matplotlib package is the most well-known library for data visualization. It is great for making graphs and plots. The graphs are also highly customizable.

Seaborn: Seaborn is based on Matplotlib. It makes it easy to generate various plots such as heat maps, time series, and violin plots.

Algorithmic Libraries:

Scikit-learn: The Scikit-learn library contains tools for statistical modelling, including regression, classification, clustering, and so on. This library is built on NumPy, SciPy and Matplotlib.

Statsmodels: Statsmodels is also a Python module that allows users to explore data, estimate statistical models and perform statistical tests.

Importing and Exporting Data:

Importing Data:

Data acquisition is the process of loading and reading data into Python from various sources.

To read any data using Python's pandas package, there are two important factors to consider:

- Format: It is the way data is encoded. We can usually tell different encoding schemes by looking at the ending of the file name. Some common encodings are CSV, JSON, XLSX, HDF and so forth.

- Path: The path tells us where the data is stored. Usually, it is stored either on the computer we are using or online on the internet.

Importing a CSV into Python:

In pandas, the read_csv function can read files with columns separated by commas into a pandas data frame. Reading data in pandas can be done quickly in three lines: import pandas, define the path, and call read_csv.
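The sketch below shows the general shape of such a read. To keep it self-contained, an in-memory text buffer stands in for a real file path or URL, and the two rows of data are invented. The file is assumed to have no header row, which is why header=None is passed.

```python
import io
import pandas as pd

# Stand-in for a real file: in practice `path` would be a file name or URL.
# The two rows below are invented sample data with no header row.
path = io.StringIO("audi,sedan,13950\nbmw,sedan,16430\n")

# header=None tells pandas the first row is data, not column names,
# so the columns get default integer labels 0, 1, 2.
df = pd.read_csv(path, header=None)
```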

It is difficult to work with the data frame without having meaningful column names.

However, we can assign column names in pandas.

We first put the column names in a list called headers, then we set df.columns equal to headers to replace the default integer headers with the list.
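A minimal sketch of this step, again using an in-memory buffer with invented rows in place of a real file. The column names in headers are assumptions chosen for this example.

```python
import io
import pandas as pd

# Invented sample data without a header row.
csv_text = "audi,sedan,13950\nbmw,sedan,16430\n"
df = pd.read_csv(io.StringIO(csv_text), header=None)

# Column names are assumptions chosen for this example.
headers = ["make", "body_style", "price"]

# Assigning to df.columns replaces the default labels 0, 1, 2.
df.columns = headers
```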

Exporting a Pandas dataframe to CSV:

To do this, specify the file path, including the file name, that you want to write to. For example, if you would like to save the dataframe df as automobile.csv on your own computer, you can use the df.to_csv method.
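As a hedged sketch, the snippet below writes a small invented data frame to automobile.csv and reads it back. A temporary directory stands in for a location on your own computer, and index=False is an optional choice made here so the row labels are not written to the file.

```python
import os
import tempfile
import pandas as pd

df = pd.DataFrame({"make": ["audi", "bmw"], "price": [13950, 16430]})

# A temporary directory stands in for a location on your own computer.
out_dir = tempfile.mkdtemp()
path = os.path.join(out_dir, "automobile.csv")

# index=False leaves the row labels out of the file.
df.to_csv(path, index=False)

# Read the file back to confirm the round trip.
restored = pd.read_csv(path)
```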

Exporting to different formats in Python:
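pandas uses the same read/write naming pattern for other formats. As a sketch, the snippet below round-trips a small invented table through JSON with to_json and read_json. The pairs read_excel/to_excel and read_sql/to_sql follow the same pattern but need extra packages or a database connection, so they are not run here.

```python
import io
import pandas as pd

df = pd.DataFrame({"make": ["audi", "bmw"], "price": [13950, 16430]})

# With no path argument, to_json returns the JSON text as a string.
json_text = df.to_json(orient="records")

# read_json accepts a path or a file-like object.
restored = pd.read_json(io.StringIO(json_text), orient="records")
```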

Analyzing Data in Python:

We assume that the data has been loaded. It's time for us to explore the dataset. Pandas has several built-in methods that can be used to understand the data types of features or to look at the distribution of data within the dataset. Using these methods gives an overview of the dataset and also points out potential issues, such as features with the wrong data type, which may need to be resolved later on.

Basic insights from the data:

- Understand data before beginning any analysis.

- Should check:

  - Data types

  - Data distribution

- Locate potential issues with the data.

Data comes in a variety of types. The main types stored in pandas objects are object, float, int, and datetime. The data type names are somewhat different from those in native Python. The table below shows the differences and similarities between them.

Why Check data types?

There are two reasons to check data types in a dataset:

- Pandas automatically assigns types based on the encoding it detects from the original data table. For a number of reasons, this assignment may be incorrect.

- The second reason is that it allows an experienced data scientist to see which Python functions can be applied to a specific column.

When the dtypes attribute is accessed on the data frame, the data type of each column is returned in a series.
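The sketch below shows a type check on invented data. The "?" placeholder in the price column is an assumption, used to illustrate how a column that should be numeric can be inferred as a non-numeric type (typically object).

```python
import io
import pandas as pd

# Invented sample data. The "?" placeholder in the price column is an
# assumption used to show how a numeric column can be inferred as text.
csv_text = "make,length,price\naudi,176.6,13950\nbmw,176.8,?\n"
df = pd.read_csv(io.StringIO(csv_text))

# dtypes is an attribute (no parentheses) and returns a Series
# mapping each column name to its inferred type.
types = df.dtypes
print(types)
```

Here length is read as a float, while price is not numeric because of the placeholder, which would block numeric functions on that column until it is cleaned.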

We may also want to check the statistical summary of each column to learn about the distribution of data in each column.

The statistical metrics can tell the data scientist whether there are mathematical issues such as extreme outliers and large deviations. The data scientist may have to address these issues later. To get the quick statistics, we use the describe method.

It returns the number of non-missing values in the column as count, the average column value as mean, the column standard deviation as std, the minimum and maximum values as min and max, and the boundary of each of the quartiles (25%, 50%, and 75%).

By default, the dataframe.describe function skips columns that do not contain numbers and ignores missing values.
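A minimal sketch of the default behavior, using an invented data frame with one text column and two numeric columns:

```python
import pandas as pd

# Invented sample data: two numeric columns and one text column.
df = pd.DataFrame(
    {
        "make": ["audi", "bmw", "bmw", "volvo"],
        "length": [176.6, 176.8, 189.0, 188.8],
        "price": [13950, 16430, 20970, 12940],
    }
)

# By default only the numeric columns are summarized,
# so "make" does not appear in the result.
summary = df.describe()
print(summary)
```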

It is possible to make the describe method work for object-type columns as well. To enable a summary of all the columns, we can add the argument include="all" inside the parentheses of the describe function.

Now, the outcome shows the summary of all 26 columns, including object-typed attributes.

We see that for the object-type columns, a different set of statistics is evaluated: unique, top, and freq.

- unique is the number of distinct objects in the column.

- top is the most frequently occurring object.

- freq is the number of times the top object appears in the column.

Some values in the table are shown here as NaN which stands for not a number. This is because that particular statistical metric cannot be calculated for that specific column data type.
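The sketch below applies include="all" to a small invented data frame (not the 26-column dataset) to show which statistics are filled in and which come back as NaN.

```python
import pandas as pd

# Invented sample data: one text column and one numeric column.
df = pd.DataFrame(
    {
        "make": ["audi", "bmw", "bmw", "volvo"],
        "price": [13950, 16430, 20970, 12940],
    }
)

full = df.describe(include="all")
print(full)

# Text column: unique, top, and freq are filled in; mean is NaN.
# Numeric column: mean is filled in; unique is NaN.
```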

Another method you can use to check your dataset is the dataframe.info function. This function prints a concise summary of the data frame: the index range, each column's name, its count of non-null values and its data type, and the memory usage.
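As a sketch of what info reports, the snippet below runs it on a small invented data frame with one missing value. Capturing the text in a buffer is optional and is done here only so the report can be inspected; calling df.info() with no arguments prints the same text directly.

```python
import io
import pandas as pd

# Invented sample data; the None value shows up as a missing entry.
df = pd.DataFrame({"make": ["audi", "bmw", None], "price": [13950, 16430, 20970]})

# info() prints to standard output by default; passing a buffer
# captures the text so it can be inspected or logged.
buffer = io.StringIO()
df.info(buf=buffer)
report = buffer.getvalue()
print(report)
```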